Give Your App a Voice: A Guide to Integrating AI Voice APIs

Imagine your user is cooking, their hands are covered in flour, and they want to add milk to their shopping list app. They can’t touch the screen. What if they could just say, “Hey, Listify, add milk to my shopping list”? Just like that, it’s done.

This isn’t sci-fi; it’s the power of AI voice integration. By teaching your app to listen and speak, you’re not just adding a cool feature. You’re fundamentally changing how people interact with your software, making it more accessible, convenient, and genuinely helpful. This guide walks you through integrating AI voice APIs into your app, skipping the dense jargon and focusing on what you, the developer, actually need to know.

Why Bother with Voice?

Before we get into the nuts and bolts, let’s talk about the “why.” What’s in it for you and your users?

- Make Your App a Joy to Use: Voice is natural. It allows hands-free operation, making your app usable in more situations, from driving to multitasking in the kitchen.

- Open Doors with Accessibility: For users with visual impairments or motor disabilities, a voice interface isn’t just a convenience; it can be the most practical way to use your app at all.

- Boost Engagement: For many people, talking feels quicker and more natural than typing. Conversational interfaces can make users feel more connected to your app, encouraging them to use it more often.

- Stand Out from the Crowd: In a saturated app market, a well-implemented voice feature can be the unique selling point that makes users choose your app over a competitor’s.

The Toolkit: Types of AI Voice APIs

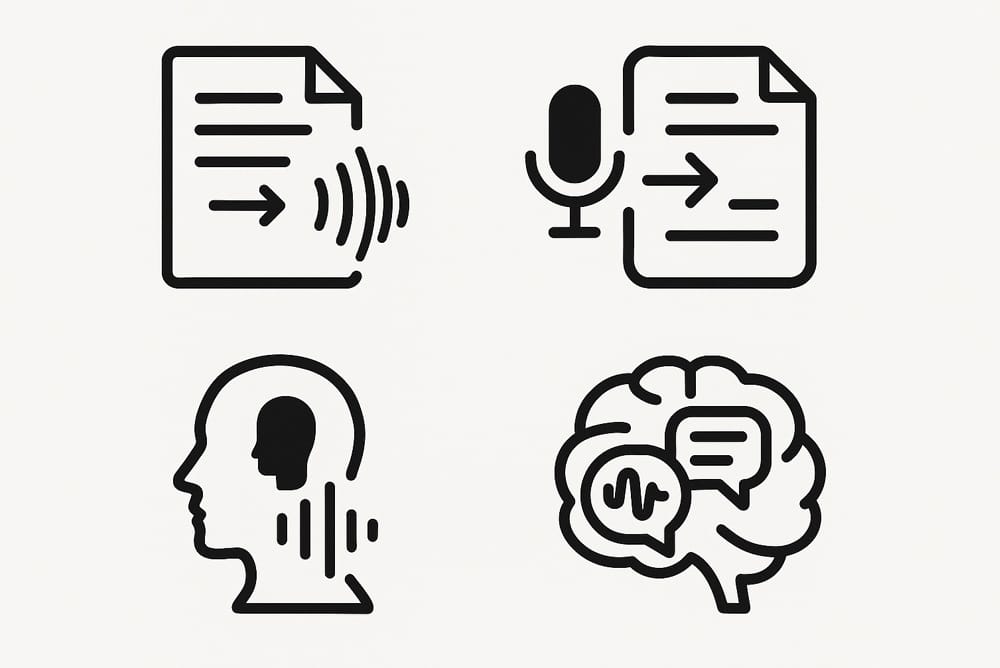

When we talk about “voice AI,” we’re talking about a few different tools. Knowing which tool does what is the first step.

- Text-to-Speech (TTS): This is the “speaking” part. Your app supplies text, and the API turns it into natural-sounding audio. Think of GPS directions or your app reading an article aloud.

- Speech-to-Text (STT): This is the “listening” part. The user speaks, and the API converts their words into written text that your app can process. This is the magic behind voice commands and dictation.

- Voice Recognition: Where STT works out what was said, voice (or speaker) recognition identifies who is speaking, which is useful for security features or for personalizing the user experience.

- Voice Cloning: This technology creates a synthetic voice that mimics a specific human voice. It has niche but powerful applications in branding and personalized content.

- Natural Language Processing (NLP): This is the “understanding” part. It’s the brain that works out the intent behind the transcribed words, allowing for a real conversation instead of simple command-and-response.
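To make the “understanding” step concrete, here is a minimal sketch of what an NLP layer hands back to your app: a structured intent extracted from a transcript, using the Listify command from the introduction. A production app would use an NLP service or a language model; this toy version uses a regular expression instead, and the `Intent` type, the `add_item` intent name, and the pattern are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Intent:
    """A structured command extracted from a transcript (hypothetical schema)."""
    name: str
    item: str
    list_name: str


# Matches phrases like "add milk to my shopping list", with an optional wake phrase.
ADD_PATTERN = re.compile(
    r"^(?:hey,?\s+listify,?\s+)?add\s+(?P<item>.+?)\s+to\s+my\s+(?P<list>.+?)\s+list\W*$",
    re.IGNORECASE,
)


def parse_intent(transcript: str) -> Optional[Intent]:
    """Return an Intent if the transcript is an 'add item' command, else None."""
    match = ADD_PATTERN.match(transcript.strip())
    if match is None:
        return None  # not a command this toy parser understands
    return Intent(
        name="add_item",
        item=match.group("item").lower(),
        list_name=match.group("list").lower(),
    )


print(parse_intent("Hey, Listify, add milk to my shopping list"))
# Intent(name='add_item', item='milk', list_name='shopping')
```

Returning `None` for anything unrecognized gives the rest of your app a clear signal to ask the user to rephrase rather than guess.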

You don’t have to build these complex systems from scratch; many companies offer them as ready-made cloud services. Among the big players, you’ll find Google Cloud Speech-to-Text for transcription and Amazon Polly for speech synthesis. You can also explore the speech services in Microsoft Azure Cognitive Services (now branded Azure AI services) or IBM Watson’s Speech to Text and Text to Speech services. For transcription, many developers also turn to OpenAI’s Whisper API.

Your Step-by-Step Integration Plan

Ready to get your hands dirty? Here’s a practical roadmap.

Step 1: Start with a “What if…?”

Before writing a single line of code, define your goal. What is the experience you want to create?

- What if users could search for products just by speaking? (You’ll need STT.)

- What if the app could read out new notifications? (You’ll need TTS.)

- What if customers could ask a voice assistant questions and hear the answers? (You’ll need STT, NLP, and TTS.)

A clear use case is your North Star. It dictates which APIs you need and how they should work together.
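The voice-assistant case chains all three capabilities. Here is a minimal sketch of that wiring, assuming hypothetical `SpeechToText`, `Responder`, and `TextToSpeech` interfaces that you would back with your chosen provider’s SDK. The `Fake…` classes are offline stand-ins so the flow can run without any service.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class Responder(Protocol):
    def reply(self, text: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class VoiceAssistant:
    """Chains STT -> NLP -> TTS; each stage can be swapped for another provider."""

    def __init__(self, stt: SpeechToText, nlp: Responder, tts: TextToSpeech) -> None:
        self.stt = stt
        self.nlp = nlp
        self.tts = tts

    def handle(self, audio: bytes) -> bytes:
        text = self.stt.transcribe(audio)   # listening
        answer = self.nlp.reply(text)       # understanding
        return self.tts.synthesize(answer)  # speaking


# Offline stand-ins for real provider SDKs (hypothetical, for illustration only).
class FakeSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")  # pretend the "audio" is already text


class FakeFAQ:
    def reply(self, text: str) -> str:
        if "hours" in text.lower():
            return "We are open 9 to 5."
        return "Sorry, I didn't catch that."


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")  # pretend the bytes are audio


assistant = VoiceAssistant(FakeSTT(), FakeFAQ(), FakeTTS())
print(assistant.handle(b"What are your hours?"))
```

Keeping each stage behind a small interface means you can trial one provider for STT and another for TTS without touching the rest of your app.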

Step 2: Choose Your Voice Provider

With your goal in mind, it’s time to shop around. When evaluating providers, ask yourself:

- Quality: For TTS, how human-like does it need to sound, and do you need different voices, languages, or accents? For STT, how accurately does it transcribe your users’ accents and vocabulary?

- Speed: Does it need to be near-instant (for a real-time conversation), or can there be a delay (for transcribing a recorded meeting)?

- Cost: What’s your budget? Pricing models vary, from pay-as-you-go (usually billed by the number of characters synthesized or the length of audio transcribed) to monthly subscriptions.

- Support: How good is the documentation? Is there a strong developer community to help if you get stuck?
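One way to make these trade-offs explicit is a weighted scorecard. Here is a minimal sketch, assuming you rate each provider from 1 to 5 on each criterion during your own trials; the provider names, ratings, and weights below are placeholders, not real benchmark data.

```python
# Relative importance of each criterion for *your* use case (placeholder values).
WEIGHTS = {"quality": 0.4, "speed": 0.3, "cost": 0.2, "support": 0.1}

# Your own 1-5 ratings from hands-on trials (hypothetical providers and numbers).
RATINGS = {
    "Provider A": {"quality": 5, "speed": 3, "cost": 2, "support": 4},
    "Provider B": {"quality": 4, "speed": 5, "cost": 4, "support": 3},
}


def weighted_score(ratings: dict[str, float], weights: dict[str, float]) -> float:
    """Sum of rating * weight, normalized so the weights need not add up to 1."""
    total_weight = sum(weights.values())
    return sum(ratings[c] * w for c, w in weights.items()) / total_weight


ranked = sorted(RATINGS, key=lambda p: weighted_score(RATINGS[p], WEIGHTS), reverse=True)
for provider in ranked:
    print(f"{provider}: {weighted_score(RATINGS[provider], WEIGHTS):.2f}")
```

With these placeholder numbers, Provider B (4.2) edges out Provider A (3.7) because speed and cost outweigh A’s lead in quality; a real-time assistant and a batch transcription tool would likely weight these criteria very differently.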

Step 3: Getting Your Hands Dirty (The Code Part)

Once you’ve picked a provider, it’s time to wire it up.

- Get Your Keys: Sign up for the service and get your API keys. Think of these as the password your app uses to access the service. Keep them safe: load them from environment variables or a secrets manager rather than hard-coding them, and never commit them to source control. In a mobile or web app, route requests through your own backend so the key never ships to users’ devices.

- Install the SDK: Most providers offer an SDK (Software Development Kit) for popular languages like Python, Node.js, or Swift. It makes your life easier by handling much of the boilerplate, such as authentication and request formatting.

- Make the Call: Using the SDK, you send data (either text to be spoken or audio to be transcribed) to the API and receive the result.

- Handle the Response: Your app gets the result back from the API, either the transcribed text or the synthesized audio. Now you just need to display it, play it, or act on it.
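The four steps above fit in a few lines. Here is a minimal sketch, assuming the key is stored in an environment variable (the name `VOICE_API_KEY` is hypothetical) and using a hypothetical `FakeTranscriptionClient` in place of a real SDK, since each provider names its clients and methods differently.

```python
import os


class FakeTranscriptionClient:
    """Stand-in for a provider SDK client (hypothetical; real SDK method names differ)."""

    def __init__(self, api_key: str) -> None:
        self.api_key = api_key  # a real client would attach this to each request

    def transcribe(self, audio: bytes) -> str:
        # A real client would upload the audio and return the provider's transcript.
        return "add milk to my shopping list" if audio else ""


def load_api_key(var_name: str = "VOICE_API_KEY") -> str:
    """Get your keys: read the key from the environment instead of hard-coding it."""
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first.")
    return key


def handle_recording(audio: bytes, client: FakeTranscriptionClient) -> str:
    """Make the call, then handle the response by acting on the transcript."""
    transcript = client.transcribe(audio).strip()
    if not transcript:
        return "Sorry, I didn't hear anything. Please try again."
    return f"You said: {transcript}"
```

In your app you would create the client once, for example `client = FakeTranscriptionClient(api_key=load_api_key())`, then call `handle_recording(audio_bytes, client)` for each recording, swapping in your provider’s real client when you integrate. Failing fast when the key is missing makes a misconfigured deployment obvious immediately instead of surfacing later as confusing authentication errors.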

Step 4: Test in the Real World

Testing with a high-end microphone in a quiet room is not enough. You need to test under real-world conditions:

- Different accents and speaking styles.

- Noisy environments (cafes, streets, cars).

- Low-quality phone microphones.

- How your app handles errors when the API can’t understand the speech.

The more diverse your testing, the more robust your feature will be.
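To compare results across these conditions, a common yardstick for STT is word error rate (WER): the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the API’s output, divided by the number of words in the reference. Here is a minimal sketch; the sample transcripts are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("Reference transcript must not be empty.")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words (Levenshtein distance over words).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution (or match)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


# The same command recorded in two conditions (made-up transcripts).
reference = "add milk to my shopping list"
print(word_error_rate(reference, "add milk to my shopping list"))  # quiet room
print(word_error_rate(reference, "add silk to my shopping"))       # noisy cafe
```

The quiet-room sample scores 0.0, while the noisy one scores 2/6 ≈ 0.33 (one substitution, “milk” to “silk”, and one deletion, “list”). Tracking WER for each accent, environment, and microphone shows where recognition breaks down.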

A Quick Word on Trust and Security

When your app listens, you are handling user data. This is a big responsibility.

- Be Transparent: Tell your users exactly what you’re recording and why. Your privacy policy should be clear and easy to understand.

- Encrypt Everything: Make sure all data sent to and from the API is encrypted in transit (HTTPS/TLS), and encrypt any recordings or transcripts you store.

- Follow the Rules: Be aware of data privacy laws like the GDPR and CCPA. They are there to protect users, and following them protects you.
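For data in transit, a simple safeguard is to refuse any endpoint that isn’t HTTPS and to keep certificate verification switched on. Here is a minimal sketch using Python’s standard `urllib.parse` and `ssl` modules; the endpoint URL is a placeholder, and official SDKs typically use HTTPS already, so this matters most when you call an API directly.

```python
import ssl
from urllib.parse import urlparse


def require_https(endpoint: str) -> str:
    """Reject endpoints that would send audio or transcripts unencrypted."""
    if urlparse(endpoint).scheme.lower() != "https":
        raise ValueError(f"Refusing non-HTTPS endpoint: {endpoint}")
    return endpoint


def secure_context() -> ssl.SSLContext:
    """TLS context that verifies the server certificate and hostname."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse outdated protocol versions
    return context


endpoint = require_https("https://voice-api.example.com/v1/transcribe")  # placeholder URL
context = secure_context()
print(context.check_hostname, context.verify_mode == ssl.CERT_REQUIRED)
```

You could then pass `context` to `urllib.request.urlopen(request, context=context)`. Resist the temptation to disable verification to silence certificate errors during development; that quietly removes the protection encryption is supposed to give.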

Pro-Tips for a Great Voice Experience

- Start Small: Build a Minimum Viable Product (MVP) to test your core idea before investing heavily.

- Listen to Your Users: Gather feedback constantly. How are people actually using the feature? What frustrates them?

- Provide Fallbacks: What happens if the voice command fails? Always give the user a way to complete the action through the traditional touch interface.

- Stay Updated: AI tech moves fast. Keep an eye on your provider’s updates to bring the latest improvements to your users.
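The fallback tip can be built directly into your response handling: if the transcript is missing or the recognizer is unsure, don’t guess; confirm with the user or offer the touch interface. Here is a minimal sketch, assuming your STT provider returns a confidence score between 0 and 1 (many do, though field names differ); the `VoiceResult` type, the 0.75 threshold, and the action names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Below this confidence, confirm with the user instead of acting (tune from real data).
CONFIDENCE_THRESHOLD = 0.75


@dataclass
class VoiceResult:
    transcript: Optional[str]  # None if the request failed or nothing was recognized
    confidence: float = 0.0


def next_action(result: VoiceResult) -> str:
    """Decide whether to act on a voice command or fall back to the touch interface."""
    if not result.transcript:
        return "show_touch_input"   # nothing usable: open the normal on-screen form
    if result.confidence < CONFIDENCE_THRESHOLD:
        return "confirm_with_user"  # e.g. "Did you mean 'add milk'?"
    return "execute_command"


print(next_action(VoiceResult("add milk to my shopping list", 0.93)))
print(next_action(VoiceResult("add silk to my shopping list", 0.41)))
print(next_action(VoiceResult(None)))
```

A middle “confirm” step keeps voice useful when recognition is shaky, while the touch fallback guarantees the user can always finish the task.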

The Future is Talking

Integrating voice APIs is more than just a technical task; it’s about creating a new kind of relationship with your users. By giving your app a voice, you make it a more intuitive, accessible, and indispensable part of their lives. The learning curve is worth it, and by starting today, you’re building an app that’s ready for the future of human-computer interaction.